The batch processing is very straightforward just by providing the audio file to process and describing its format the API returns the best-matching text, together with the recognition accuracy. The API, still in alpha, exposes a RESTful interface that can be accessed via common POST HTTP requests. An Outline of the Google Cloud Speech API Now that such technology will be accessible as a cloud service to developers, it will allow any application to integrate speech-to-text recognition, representing a valuable alternative to the common Nuance technology (used by Apple’s Siri and Samsung’s S-Voice, for instance) and challenging other solutions such as the IBM Watson speech-to-text and the Microsoft Bing Speech API. Speech-to-text features are used in a multitude of use cases including voice-controlled smart assistants on mobile devices, home automation, audio transcription, and automatic classification of phone calls. The neural network is updated as new speech samples are collected by Google, so that new terms are learned and the recognition accuracy keeps on increasing.

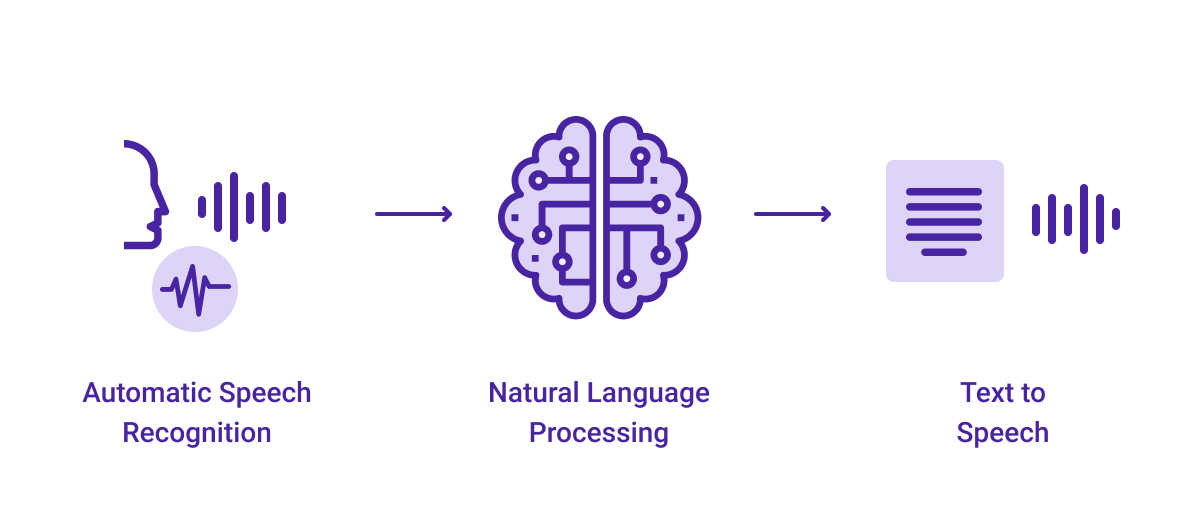

The capability to convert voice to text is based on deep neural networks, state-of-the-art machine learning algorithms recently demonstrated to be particularly effective for pattern detection in video and audio signals. This speech recognition technology has been developed and already used by several Google products for some time, such as the Google search engine where there is the option to make voice search. Google recently opened its brand new Cloud Speech API – announced at the NEXT event in San Francisco – for a limited preview. You can try to create a list of frequently used phrases and add that in the STT and check if there is an improvement in the outcome.Īlso, the audio channels that you are trying to transcribe, if it contains multiple channels, this document can be useful as well.Discover the Strengths and Weaknesses of Google Cloud Speech API in this Special Report by Cloud Academy’s Roberto Turrin Refer to the list of supported class tokens to see which tokens are available for your language.Īpart from that, one other approach to improve the STT accuracy can be by addition of common phrases (single and multi-word) in the phrases field of a PhraseSet object. Please note there are predefined classes available and to use a class in model adaptation, include a class token in the phrases field of a PhraseSet resource.

A class allows you to improve transcription accuracy for large groups of words that map to a common concept, but that don't always include identical words or phrases. This concept is part of model adaptation technique where classes represent common concepts that occur in natural language, such as monetary units and calendar dates. I would also like to draw your attention to the improvement of STT by use of classes. The enhanced model can provide better results at a higher price (although you can reduce the price by opting into data logging). By default, STT has two types of phone call model that you can use for speech recognition, a standard model and an enhanced model.

You can also make use of the enhanced models to improve the quality of STT.Please check the following link for more information. It improves the transcription results by helping Speech-to-Text recognize specific words or phrases more frequently than other options that might otherwise be suggested.Īlso in context to the technique there is a model adaptation boosting feature which can be pretty useful for fine-tuning the biasing of the recognition model. You can improve the transcription results by using “ Model adaptation” techniques. Please find below a description of the techniques: In context to your question I consulted the documentation and found some techniques that can perhaps be helpful for your problem statement.
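The batch request described in the API outline can be sketched with the Python standard library. This is a minimal sketch. It assumes the current v1 REST endpoint (`speech:recognize`) and its JSON field names (`sampleRateHertz`, `languageCode`, base64 `audio.content`). The alpha API discussed in the article may have used a different path and different field names. The helper names are hypothetical. Passing an API key as a `key` query parameter is also an assumption about your authentication setup.

```python
import base64
import json
import urllib.request

# Assumption: current v1 REST endpoint and field names; the alpha API the
# article describes may have used a different path and field names.
RECOGNIZE_URL = "https://speech.googleapis.com/v1/speech:recognize"


def build_recognize_request(audio_bytes, encoding="LINEAR16",
                            sample_rate_hertz=16000, language_code="en-US"):
    """Describe the audio format in `config` and inline the audio as base64."""
    return {
        "config": {
            "encoding": encoding,
            "sampleRateHertz": sample_rate_hertz,
            "languageCode": language_code,
        },
        "audio": {"content": base64.b64encode(audio_bytes).decode("ascii")},
    }


def make_http_request(body, api_key):
    """Wrap the JSON body in a POST request (hypothetical API-key auth)."""
    return urllib.request.Request(
        f"{RECOGNIZE_URL}?key={api_key}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def best_transcripts(response):
    """Return (transcript, confidence) for the top alternative of each result."""
    best = []
    for result in response.get("results", []):
        alternatives = result.get("alternatives", [])
        if alternatives:
            top = alternatives[0]  # alternatives are ordered most probable first
            best.append((top.get("transcript", ""), top.get("confidence", 0.0)))
    return best


if __name__ == "__main__":
    body = build_recognize_request(b"\x00\x00\x01\x00")  # placeholder PCM bytes
    print(json.dumps(body["config"]))
    # Hand-written response in the documented v1 shape, not real API output.
    sample = {"results": [{"alternatives": [
        {"transcript": "hello world", "confidence": 0.92}]}]}
    print(best_transcripts(sample))
```

To send the request, pass the result of `make_http_request` to `urllib.request.urlopen`. Then decode the JSON reply and hand it to `best_transcripts`. The first alternative of each result is the recognizer's most probable hypothesis, and its `confidence` field is the accuracy estimate the article refers to.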
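The model adaptation suggestions in the answer (phrase lists, class tokens and boost) can be expressed as inline PhraseSets attached to a `RecognitionConfig`. This is a minimal sketch. It assumes the v1 REST JSON shape `adaptation.phraseSets[].phrases[].value` with an optional set-level `boost`. The helper functions, the example phrases and the boost value of 10 are hypothetical. Check `$OOV_CLASS_DIGIT_SEQUENCE` against the supported class tokens for your language before relying on it.

```python
import json

# Example predefined class token (assumption): confirm it appears in the
# supported class tokens list for your language before relying on it.
DIGIT_SEQUENCE = "$OOV_CLASS_DIGIT_SEQUENCE"


def build_phrase_set(phrases, boost=None):
    """Build an inline PhraseSet where each string becomes {"value": ...}."""
    if boost is not None and boost < 0:
        raise ValueError("this sketch only accepts non-negative boost values")
    phrase_set = {"phrases": [{"value": p} for p in phrases]}
    if boost is not None:
        # Higher boost biases recognition more strongly toward these phrases,
        # at the cost of more false positives.
        phrase_set["boost"] = boost
    return phrase_set


def with_adaptation(config, *phrase_sets):
    """Return a copy of a RecognitionConfig dict with inline phrase sets added."""
    adapted = dict(config)  # shallow copy: the caller's dict is left unchanged
    adapted["adaptation"] = {"phraseSets": list(phrase_sets)}
    return adapted


base = {"encoding": "LINEAR16", "sampleRateHertz": 16000, "languageCode": "en-US"}
config = with_adaptation(
    base,
    # Frequently used single- and multi-word phrases (hypothetical examples).
    build_phrase_set(["Cloud Speech", "model adaptation"], boost=10.0),
    # A phrase built around a class token (hypothetical wording).
    build_phrase_set([f"my account number is {DIGIT_SEQUENCE}"]),
)
print(json.dumps(config, indent=2))
```

Keeping boosted and unboosted phrases in separate sets lets you tune the boost for one group without affecting the other. This matters because a higher boost raises both the recognition rate of those phrases and the risk of false positives.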
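The answer also mentions the enhanced phone call model and multi-channel audio. The v1 `RecognitionConfig` fields that cover both are `model`, `useEnhanced`, `audioChannelCount` and `enableSeparateRecognitionPerChannel`. This is a minimal sketch that assumes 8 kHz LINEAR16 telephone audio. The function name and its defaults are hypothetical, and current model availability and pricing may differ from what the answer describes.

```python
import json


def phone_call_config(language_code="en-US", sample_rate_hertz=8000,
                      enhanced=True, channel_count=1):
    """Build a v1 RecognitionConfig (REST JSON) for telephone audio."""
    config = {
        "encoding": "LINEAR16",
        "sampleRateHertz": sample_rate_hertz,  # assumption: 8 kHz phone audio
        "languageCode": language_code,
        "model": "phone_call",
    }
    if enhanced:
        # Request the enhanced variant: potentially better results, higher price.
        config["useEnhanced"] = True
    if channel_count > 1:
        # Transcribe each channel separately (for example caller and agent);
        # each result then carries a channelTag identifying its channel.
        config["audioChannelCount"] = channel_count
        config["enableSeparateRecognitionPerChannel"] = True
    return config


print(json.dumps(phone_call_config(channel_count=2), indent=2))
```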
